by Fred Theilig – @fmtheilig

My home server seems to occasionally enter a steady state. The syslog logs, the IDS detects, the vulnerability scanner scans, the web server serves. I stop routinely checking the dashboards and only patch when the mood strikes me. I turn to YouTube looking for project ideas, but don’t often find anything interesting. So the server just sits, burning electricity.

While cleaning up around the server, a project unexpectedly found me. As an aside, the rig needs a whole lot of cleanup. I may build a 24U rack from resawn 2x4s, but that’s for another time. Anyhow, my server uses one Ethernet port for management, another for the VMs, and a third for the SPAN port. The machine, however, has four ports. What if I bonded two together to double the throughput? The VMs themselves, that is, the qcow2 files, are hosted on a NAS, which also has bonded NICs. Disk access would theoretically double. Shouldn’t be too hard.

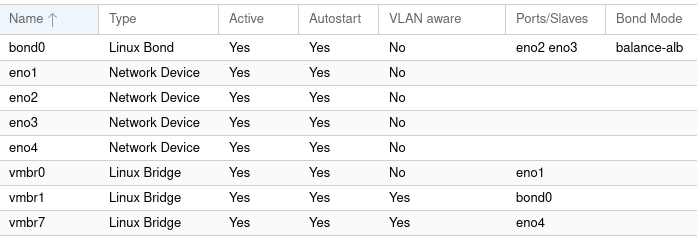

I can’t do Proxmox networking justice here, but essentially the network devices (eno1 to eno4 in my case) are attached to Linux bridges (vmbr0, vmbr1, and vmbr7), each of which can be assigned a VLAN. vmbr1 is my production bridge, so I cabled eno3 to the switch and added it to that bridge. This worked and didn’t work. I didn’t lose connectivity, but I didn’t notice any speed benefit. All it provided was a redundant link, which isn’t a bad thing. Time to google.

The solution was to create a Linux Bond, add the two Ethernet devices to it, then assign the bond to the bridge. Read a better technical description here. This had the desired effect, as evidenced by the time Nessus Essentials took to scan my network, which dropped from 55 minutes to 35 minutes. This was a double-edged win for me. I was at once thinking “Wow, I (almost) doubled the speed of my VMs!” and “Wow, how stupid am I to have gone years without implementing this?” Well, better late than never.
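For reference, a bond feeding a bridge looks roughly like the following in /etc/network/interfaces on Proxmox. This is a minimal sketch, not my actual file: the member NICs (eno2 and eno3) and the 802.3ad (LACP) mode are assumptions. LACP needs matching configuration on the switch (on a Catalyst, both ports go into the same channel-group in active mode); balance-alb is a mode that needs no switch support.

```
# Bond two NICs together (member names are assumptions)
auto bond0
iface bond0 inet manual
        bond-slaves eno2 eno3
        bond-miimon 100
        bond-mode 802.3ad
        bond-xmit-hash-policy layer3+4

# The production bridge now uses the bond instead of a single NIC
auto vmbr1
iface vmbr1 inet manual
        bridge-ports bond0
        bridge-stp off
        bridge-fd 0
```

With ifupdown2 (the default on current Proxmox releases), `ifreload -a` applies the change. Bonding spreads traffic per flow, so any single connection still tops out at one link’s speed; the gain shows up when many flows run at once, such as a scanner probing many hosts.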

My Security Onion VM also needed a little help. The system is made up of twenty-four containers, and occasionally a couple would show a status of “missing”. A reboot usually resolved this, but in the meantime the system was either diminished or completely offline. Packet capture loss was also unacceptably high. I saw two possible causes: the /nsm partition was frequently full, and the VM had no swap partition. I don’t believe this was a provisioning error on my part, as the VM setup is automated. Nevertheless, these may be the cause of its rather flaky behavior. Fixing them involves Logical Volume Management (LVM), which is a skills gap for me. However, I recently completed an RHCSA bootcamp and my eyes were opened. I may do an LVM post in the near future.

Anyhow, using my new LVM skills, I added a new drive to the VM, initialized it as a physical volume, added it to the existing volume group, then expanded the /nsm logical volume. Additionally, I created and enabled a 24 GB swap volume. /etc/fstab had to be updated to make the swap space persistent. I seldom see training turn into problem solving quite so fast. Security Onion is best run on a physical appliance with tons of memory and an SSD, so this will never be a perfect solution, but it appears to be operating better. Capture loss is still terrible (frequently passing 80%), but the fault appears to lie with my old Cisco Catalyst.
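The LVM steps above can be sketched as a short shell script. The disk, volume group, and logical volume names are hypothetical placeholders (check `lsblk`, `pvs`, `vgs`, and `lvs` for the real ones), and the swap volume is created before /nsm is grown only so that `+100%FREE` doesn’t consume the space swap needs. By default it just prints each command; it runs them, as root, only with `DRY_RUN=0`.

```shell
#!/bin/sh
# Sketch: grow /nsm onto a newly added virtual disk and add a 24 GiB swap LV.
# ASSUMPTIONS: NEW_DISK, VG and NSM_LV are hypothetical placeholders; confirm
# the real names with lsblk, pvs, vgs and lvs before running anything.
# Prints the commands (dry run) unless invoked with DRY_RUN=0, as root.
set -eu

NEW_DISK="${NEW_DISK:-/dev/sdb}"  # hypothetical: the disk just added to the VM
VG="${VG:-vg_so}"                 # hypothetical volume group name
NSM_LV="${NSM_LV:-nsm}"           # hypothetical LV mounted on /nsm
SWAP_LV="swap"
SWAP_SIZE="24G"

# Print a command; execute it only when DRY_RUN=0.
run() {
  printf '+ %s\n' "$*"
  if [ "${DRY_RUN:-1}" = 0 ]; then
    "$@"
  fi
}

grow_nsm_and_add_swap() {
  run pvcreate "$NEW_DISK"                          # new disk -> physical volume
  run vgextend "$VG" "$NEW_DISK"                    # add the PV to the existing VG
  run lvcreate -L "$SWAP_SIZE" -n "$SWAP_LV" "$VG"  # reserve swap space first
  run lvextend -r -l +100%FREE "/dev/$VG/$NSM_LV"   # rest to /nsm; -r grows the fs
  run mkswap "/dev/$VG/$SWAP_LV"                    # write the swap signature
  run swapon "/dev/$VG/$SWAP_LV"                    # enable swap now
  # Persist the swap volume across reboots (skip if the line already exists).
  fstab_entry="/dev/$VG/$SWAP_LV none swap defaults 0 0"
  printf '+ append to /etc/fstab: %s\n' "$fstab_entry"
  if [ "${DRY_RUN:-1}" = 0 ]; then
    grep -qxF "$fstab_entry" /etc/fstab || printf '%s\n' "$fstab_entry" >> /etc/fstab
  fi
}

grow_nsm_and_add_swap
```

`swapon --show` and `df -h /nsm` are quick ways to confirm the result afterwards.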

Back on the topic of Nessus, I noticed that I receive far more detail on the Nessus VM itself than on the other VMs. The reason for this should be obvious, but there is a solution, and it’s called credentialed scanning. Many vulnerability scanners can authenticate to target systems to gather information they could not otherwise collect, such as installed package versions and local configuration. This requires a separate service account and, preferably, an SSH key. I implemented credentialed scanning but didn’t get a chance to see it in action. Not just yet.
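Setting up that service account might look something like this sketch. The account name, key file, and public-key placeholder are hypothetical; step 1 runs on the scanner and step 2 as root on each target, and nothing executes unless `DRY_RUN=0`. The private key then goes into the scan’s SSH credentials in Nessus, and most local checks also need root, typically granted through a sudo privilege-escalation setting in that same credential; that part lives in the Nessus UI and isn’t shown.

```shell
#!/bin/sh
# Sketch: prepare an SSH service account for credentialed scanning.
# ASSUMPTIONS: the account name, key file and public key are hypothetical.
# Step 1 runs on the scanner, step 2 as root on each target. Dry run by default.
set -eu

SVC_USER="${SVC_USER:-nessus-svc}"            # hypothetical service account
KEY_FILE="${KEY_FILE:-nessus_scan_ed25519}"   # hypothetical key path (current dir)
PUBKEY="${PUBKEY:-ssh-ed25519 AAAA-placeholder nessus-scan}"  # paste the real .pub line

# Print a command; execute it only when DRY_RUN=0.
run() {
  printf '+ %s\n' "$*"
  if [ "${DRY_RUN:-1}" = 0 ]; then
    "$@"
  fi
}

# Step 1 (scanner side): a dedicated, passphrase-less key pair for the scanner.
make_scan_key() {
  run ssh-keygen -t ed25519 -N "" -C nessus-scan -f "$KEY_FILE"
}

# Step 2 (target side): create the account and authorize only that key.
provision_target() {
  ssh_dir="/home/$SVC_USER/.ssh"
  run useradd --create-home --shell /bin/bash "$SVC_USER"
  run install -d -m 700 -o "$SVC_USER" -g "$SVC_USER" "$ssh_dir"
  printf '+ write public key to %s/authorized_keys\n' "$ssh_dir"
  if [ "${DRY_RUN:-1}" = 0 ]; then
    printf '%s\n' "$PUBKEY" > "$ssh_dir/authorized_keys"
    chown "$SVC_USER:$SVC_USER" "$ssh_dir/authorized_keys"
    chmod 600 "$ssh_dir/authorized_keys"
  fi
}

make_scan_key
provision_target
```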

The reason is that my install of Proxmox was still at version 7, which reached end of life in July. Instructions I found online for an in-place upgrade didn’t look too bad, and my VMs were safe on external storage, so why not give it a try? It did not go well.

There were precautions I could have taken, but I didn’t. I did save my interfaces file, but everything else was a complete loss. I was forced to rebuild from scratch, relearn how to mount the NAS, and figure out how to import the existing qcow2 files.
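In hindsight, two cheap precautions would have saved most of the rebuild: running Proxmox’s own `pve7to8` upgrade checker and copying the VM definitions off the host. VM metadata (name, cores, RAM, NICs and VLAN tags, disk references) lives in /etc/pve/qemu-server/<VMID>.conf, and storage definitions, including the NAS mount, live in /etc/pve/storage.cfg. This sketch assumes a hypothetical `BACKUP_DIR` on the NAS and only prints the commands unless `DRY_RUN=0`.

```shell
#!/bin/sh
# Sketch: pre-upgrade precautions on a Proxmox VE 7 host.
# ASSUMPTION: BACKUP_DIR is a hypothetical path on storage that survives a
# reinstall, e.g. a NAS share that Proxmox mounts under /mnt/pve/<storage>.
set -eu

BACKUP_DIR="${BACKUP_DIR:-/mnt/pve/nas-share/host-backup}"  # hypothetical
STAMP="$(date +%Y%m%d)"

# Print a command; execute it only when DRY_RUN=0.
run() {
  printf '+ %s\n' "$*"
  if [ "${DRY_RUN:-1}" = 0 ]; then
    "$@"
  fi
}

pre_upgrade_precautions() {
  # Proxmox's upgrade checker; read every WARN/FAIL line before upgrading.
  run pve7to8 --full
  # Save VM definitions, storage definitions and host networking.
  # -h makes tar follow the /etc/pve/qemu-server symlink to the real files.
  run mkdir -p "$BACKUP_DIR"
  run tar -czhf "$BACKUP_DIR/pve-config-$STAMP.tar.gz" \
    /etc/pve/qemu-server /etc/pve/storage.cfg /etc/network/interfaces
}

pre_upgrade_precautions
```

With the archive in hand, restoring a VM’s .conf file into /etc/pve/qemu-server/ on the rebuilt host brings back its metadata in one step.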

The image files for each of a VM’s hard drives are located in numbered folders that correspond to the virtual machine’s VM ID. They are named something like vm-101-disk-0.qcow2. All of my old files were still in the correct location, but unfortunately all VM metadata was lost: the name, cores, RAM, VLAN, and everything else about each virtual machine. The procedure is to create a new VM, giving it the correct VM ID and all required information (which can be changed later). Then detach and delete its new hard drive. From an elevated command line on the Proxmox server itself, navigate to the numbered folder and execute the following command:

```
qm importdisk 101 vm-101-disk-0.qcow2 [Share name] --format qcow2
```

This presents the image to the VM as a detached hard drive, which I then attached. Finally, I changed the boot order to boot from the ide2 device first, and we were in business.
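The whole recovery for one VM can be sketched as a script. Everything specific here is an assumption: an NFS share (an SMB share would use `pvesm add cifs` instead), the storage ID, NAS address and export path, and the name the imported volume receives (read it from the `unused0` line of `qm config 101`). The `pvesm` line is a one-time step before the replacement VM is created; the rest runs after the VM exists with its placeholder disk removed. It prints rather than executes unless `DRY_RUN=0`.

```shell
#!/bin/sh
# Sketch: re-register the NAS and reattach an existing qcow2 image.
# ASSUMPTIONS (all hypothetical): NFS storage, the storage ID, NAS address,
# export path, and the imported volume's name. Verify with `qm config`.
set -eu

STORAGE="${STORAGE:-nas-vms}"                 # hypothetical Proxmox storage ID
NAS_SERVER="${NAS_SERVER:-192.168.1.10}"      # hypothetical NAS address
NAS_EXPORT="${NAS_EXPORT:-/volume1/proxmox}"  # hypothetical NFS export path
VMID="${VMID:-101}"
BOOT_DISK="${BOOT_DISK:-ide2}"                # bus/slot used in the post
# Name assigned by the import; read it from the unused0 line of `qm config`.
IMPORTED_VOL="${IMPORTED_VOL:-$STORAGE:$VMID/vm-$VMID-disk-1.qcow2}"

# Print a command; execute it only when DRY_RUN=0.
run() {
  printf '+ %s\n' "$*"
  if [ "${DRY_RUN:-1}" = 0 ]; then
    "$@"
  fi
}

restore_vm_disk() {
  # One-time: re-add the NAS; Proxmox mounts it under /mnt/pve/$STORAGE.
  run pvesm add nfs "$STORAGE" --server "$NAS_SERVER" --export "$NAS_EXPORT" --content images,iso
  # Copy the old image into the VM as a new, unused disk, keeping qcow2.
  run qm importdisk "$VMID" "/mnt/pve/$STORAGE/images/$VMID/vm-$VMID-disk-0.qcow2" "$STORAGE" --format qcow2
  # Attach the imported volume and make it the first boot device.
  run qm set "$VMID" "--$BOOT_DISK" "$IMPORTED_VOL"
  run qm set "$VMID" --boot "order=$BOOT_DISK"
}

restore_vm_disk
```

On Proxmox 8, `qm disk import` is the current name for this command, with `qm importdisk` kept as an alias. `qm rescan --vmid 101` is another option worth knowing: it registers images already sitting in that VM’s folder as unused disks without making a copy.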

I took this opportunity to delete VMs I either didn’t need or wanted to rebuild. I also spent time identifying VMs whose purpose I had forgotten. What were OSSEC and REMnux for again? I may post about them in the future.

Perhaps I seem rather cavalier about the Proxmox upgrade, but I try to resist my inherent frightfully fearful little piglet nature and take chances. I knew the qcow2 files were safe, and if I built the environment once, I could rebuild it, perhaps better. I have no SLAs, and there is no learning without pain. The end result is that I am now running eleven VMs on Proxmox 8.2.7, and backups, vulnerability scans, and virus scans run almost twice as fast. I call that a win.